Float8 (FP8) Quantized LightGlue in TensorRT with NVIDIA Model Optimizer: up to ~6× faster and ~68% smaller engines

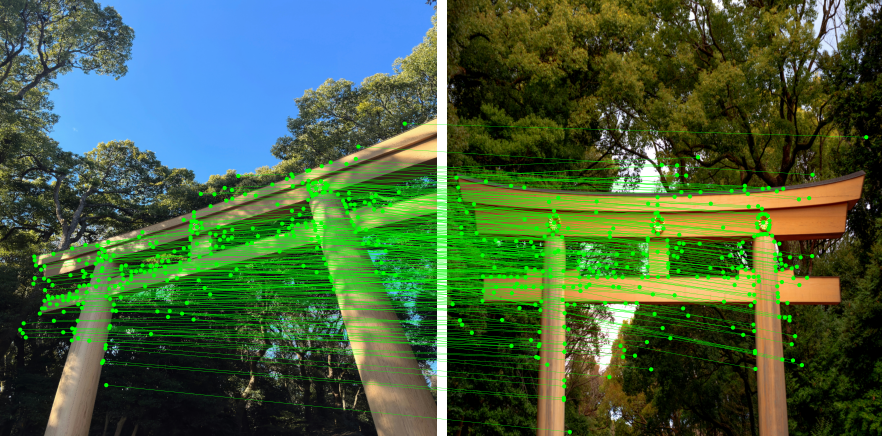

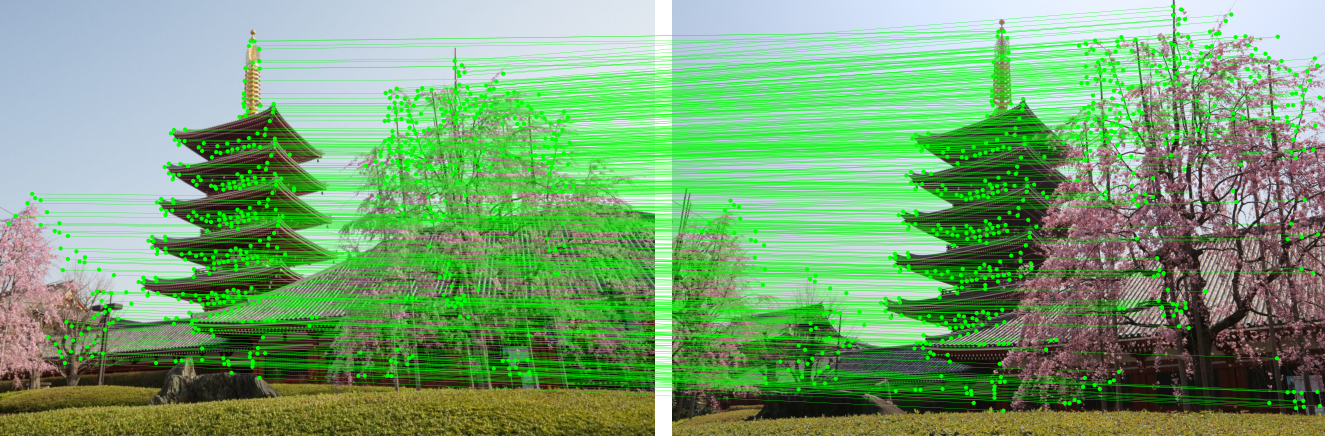

FP8 quantization via NVIDIA Model Optimizer shrinks TensorRT engines for SuperPoint + LightGlue and can cut latency versus FP32, with a visible match-quality...